Mobile App Development: Creating a Bar Code Scanner for iOS 7

Bar codes are everywhere, and you're probably more than familiar with them and with the more recent QR codes. Many mobile apps read them, but in the past an iOS mobile app developer who wanted to decode a QR code, or any bar code, had to either write their own decoder or use a third-party library like ZBar.

But something you probably didn't know is that iOS 7 has a bar code reader built into its APIs, and you can use it through the AVFoundation framework. Let me show you how to do it!

The Setup

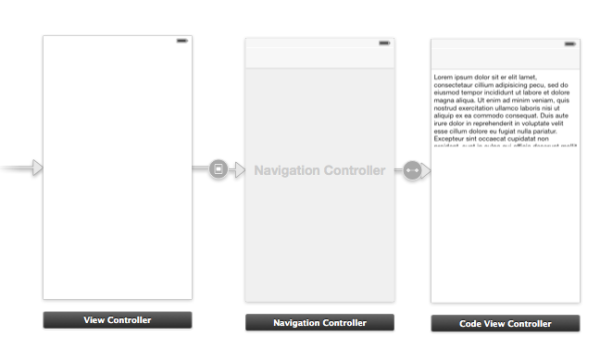

We are going to build a very simple app with just 2 view controllers and one storyboard. here's how I've set it up:

As you can see we have our main view controller (called ViewController) and another view controller that shows the decoded code (called CodeViewController).

We also set up a segue called CodeViewSegue from our main view controller to the CodeViewController, using the modal transition.

Notice that there are no UI elements that will trigger this segue. That's right, we are going to trigger it programmatically.

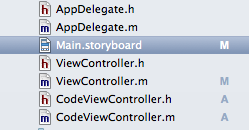

Our source list looks like this:

* Capturing Metadata

The trick to reading bar codes or QR codes lies in AVFoundation's ability to read metadata. You tell the framework which kinds of metadata you are interested in and it does the rest automatically.

In the ViewController class, type the following:

    - (void)viewDidLoad {
        [super viewDidLoad];

        // 1
        mCaptureSession = [[AVCaptureSession alloc] init];

        // 2
        AVCaptureDevice *videoCaptureDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
        NSError *error = nil;

        // 3
        AVCaptureDeviceInput *videoInput = [AVCaptureDeviceInput deviceInputWithDevice:videoCaptureDevice error:&error];
        // videoInput is nil when the device cannot be opened (for example, no camera),
        // so check it before asking the session about it.
        if (videoInput != nil && [mCaptureSession canAddInput:videoInput]) {
            [mCaptureSession addInput:videoInput];
        } else {
            NSLog(@"Could not add video input: %@", [error localizedDescription]);
        }

        // 4
        AVCaptureMetadataOutput *metadataOutput = [[AVCaptureMetadataOutput alloc] init];
        if ([mCaptureSession canAddOutput:metadataOutput]) {
            [mCaptureSession addOutput:metadataOutput];

            // 5
            [metadataOutput setMetadataObjectsDelegate:self queue:dispatch_get_main_queue()];
            [metadataOutput setMetadataObjectTypes:@[AVMetadataObjectTypeQRCode, AVMetadataObjectTypeEAN13Code]];
        } else {
            NSLog(@"Could not add metadata output.");
        }

        // 6
        AVCaptureVideoPreviewLayer *previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:mCaptureSession];
        previewLayer.frame = self.view.layer.bounds;
        [self.view.layer addSublayer:previewLayer];

        // 7
        [mCaptureSession startRunning];
    }

As usual, let's go over the code:

1. We create the capture session and keep it in an instance variable (mCaptureSession) so we can start and stop the camera when needed. Remember this; we will come back to it later on.

2. Since we want to capture video, we set up our capture device here.

3. It's time to set our input and output. The input wraps the capture device from point 2, and we add it to the capture session if possible. It's always a good habit to check whether you are allowed to do something before doing it, instead of assuming it all works.

4. The output is an AVCaptureMetadataOutput object which, as you can tell by its name, is set to spit out metadata from whatever the camera sees. Again we should check if we can add it to the capture session and do so if we can.

5. This is the core of reading bar codes. Once the metadata output has been added successfully, we set its delegate to be ourselves and set which types of metadata we are interested in. There are a few types we can set here, but for this example we only detect QR codes and EAN-13 codes, which are among the most common types of bar codes.

6. We need to see what the camera is capturing, so we add a preview layer to our controller's view.

7. Finally, we start the session which also activates the camera.
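The walkthrough above relies on an mCaptureSession instance variable and on ViewController acting as the metadata delegate, neither of which is declared in the snippet. Here is a minimal sketch of how the top of ViewController.m might look, assuming the instance variables live in a class extension (the file layout and the ViewController.h header name are assumptions, not taken from the project):

```objc
// ViewController.m (sketch; the class-extension layout is an assumption)
#import <AVFoundation/AVFoundation.h>
#import "ViewController.h"

// Adopting the protocol lets setMetadataObjectsDelegate:queue: accept self
// without a compiler warning.
@interface ViewController () <AVCaptureMetadataOutputObjectsDelegate> {
    AVCaptureSession *mCaptureSession; // created in viewDidLoad (step 1)
    NSMutableString *mCode;            // filled in by the metadata delegate method
}
@end
```

The target also needs to link against AVFoundation.framework for these symbols to resolve.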

With this code you just set up the camera to detect QR and EAN13 codes. But you haven't told the app what to do with those codes.

For this you need to implement a method from the AVCaptureMetadataOutputObjectsDelegate protocol, as follows:

    - (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputMetadataObjects:(NSArray *)metadataObjects fromConnection:(AVCaptureConnection *)connection {
        // 1
        if (mCode == nil) {
            mCode = [[NSMutableString alloc] initWithString:@""];
        }

        // 2
        [mCode setString:@""];

        // 3
        for (AVMetadataObject *metadataObject in metadataObjects) {
            AVMetadataMachineReadableCodeObject *readableObject = (AVMetadataMachineReadableCodeObject *)metadataObject;

            // 4
            if ([metadataObject.type isEqualToString:AVMetadataObjectTypeQRCode]) {
                [mCode appendFormat:@"%@ (QR)", readableObject.stringValue];
            } else if ([metadataObject.type isEqualToString:AVMetadataObjectTypeEAN13Code]) {
                [mCode appendFormat:@"%@ (EAN 13)", readableObject.stringValue];
            }
        }

        // 5
        if (![mCode isEqualToString:@""]) {
            [self performSegueWithIdentifier:@"CodeViewSegue" sender:self];
        }
    }

1. mCode is an instance variable which is used later on. It's just text to be used with the CodeViewController's UITextView.

2. mCode is reset to an empty string every time the method is called.

3. We iterate through the metadata objects that were found so that we can check which type of metadata each one is.

4. If we find a type of interest, we change the value of mCode to something we can show to the user. The decoded bar code is stored in an object of type AVMetadataMachineReadableCodeObject. Just ask for its stringValue to get the decoded value.

5. As mentioned above, we are going to show the CodeViewController programmatically, and this is where we do it. We tell the ViewController to perform the segue with the CodeViewSegue identifier, just as we named it in the setup above.

Now, this code alone won't work as expected. Can you guess what's wrong?

The camera will detect a bar code (to speak in general terms) and the app will show the CodeViewController, but something is missing.

That's right; we need to pass the value of the mCode variable to the CodeViewController.

This is easily done by implementing the following method:

    - (void)prepareForSegue:(UIStoryboardSegue *)segue sender:(id)sender {
        if ([segue.identifier isEqualToString:@"CodeViewSegue"]) {
            UINavigationController *navController = (UINavigationController *)segue.destinationViewController;
            CodeViewController *controller = (CodeViewController *)navController.topViewController;
            controller.code = mCode;
        }
    }

Remember from our storyboard that CodeViewController is embedded in a UINavigationController. To get to it we need to ask the navigation controller for its topViewController, which will be a controller of type CodeViewController.

Once we have the correct controller, we can set its code property to be the value of the mCode variable.
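The receiving side is not shown in this article, so here is a minimal sketch of what CodeViewController could look like. The code property and the UITextView come from the text above; the codeTextView outlet name and the two-file layout are assumptions made for illustration:

```objc
// CodeViewController.h (sketch)
#import <UIKit/UIKit.h>

@interface CodeViewController : UIViewController
// Set by ViewController in prepareForSegue:sender:.
// "copy" takes a snapshot, because mCode is a mutable string that is
// cleared again on the next metadata callback.
@property (nonatomic, copy) NSString *code;
// Hypothetical outlet name; connect it to the UITextView in the storyboard.
@property (nonatomic, weak) IBOutlet UITextView *codeTextView;
@end

// CodeViewController.m (sketch)
@implementation CodeViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // The outlet is only connected once the view has loaded, so the text
    // is assigned here rather than in prepareForSegue:sender:.
    self.codeTextView.text = self.code;
}

@end
```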

Now everything should be working correctly.

It would be a good idea to start the camera when ViewController becomes visible and stop it when it disappears. You can do this by adding the following to the ViewController class:

    - (void)viewWillAppear:(BOOL)animated {
        [super viewWillAppear:animated];

        if ([mCaptureSession isRunning] == NO) {
            [mCaptureSession startRunning];
        }
    }

    - (void)viewWillDisappear:(BOOL)animated {
        [super viewWillDisappear:animated];

        if ([mCaptureSession isRunning]) {
            [mCaptureSession stopRunning];
        }
    }

As you can see, it's really not that hard to implement a bar code reader in your iPhone mobile app on iOS 7. Since you are using AVFoundation, you can do much more than just display the data the code contains.

Definitely check out the other types supported by the AVCaptureMetadataOutput class.
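When requesting more types, it can help to ask the output which ones it can actually deliver, since setting a type that is not listed in availableMetadataObjectTypes raises an exception. The helper below is a hypothetical sketch (RequestSupportedTypes is not part of AVFoundation) that keeps only the desired types the output reports as available:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: request only those desired types that this output
// reports as available. Call it after the output has been added to a
// session that already has a camera input; before that, the list of
// available types may be empty.
static void RequestSupportedTypes(AVCaptureMetadataOutput *output, NSArray *desiredTypes) {
    NSArray *available = [output availableMetadataObjectTypes];
    NSMutableArray *types = [NSMutableArray array];
    for (NSString *type in desiredTypes) {
        if ([available containsObject:type]) {
            [types addObject:type];
        }
    }
    [output setMetadataObjectTypes:types];
}
```

In viewDidLoad this could replace the setMetadataObjectTypes: call in step 5, for example with RequestSupportedTypes(metadataOutput, @[AVMetadataObjectTypeQRCode, AVMetadataObjectTypeEAN13Code, AVMetadataObjectTypeCode128Code, AVMetadataObjectTypePDF417Code]). The delegate method would then need a branch for each extra type.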

Just remember that this functionality is only available on iOS 7 and above. If you want to support older versions of iOS, I would suggest you continue using libraries like ZBar.
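If a single app has to run on both older and newer systems, one possible approach is to check at runtime whether the machine-readable code class exists and fall back to a library otherwise. This is only a sketch: BuiltInScannerAvailable is a hypothetical helper name, and it assumes that the presence of AVMetadataMachineReadableCodeObject is a good enough signal for built-in bar code support:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: YES when the class that carries decoded bar codes
// exists at runtime, which is the case on iOS 7 and above.
static BOOL BuiltInScannerAvailable(void) {
    return NSClassFromString(@"AVMetadataMachineReadableCodeObject") != Nil;
}
```

The app could call this once at launch and choose between the AVFoundation-based ViewController and a ZBar-based one.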

As always, let me know if you have any questions and submit your comments, we're happy to read them!

About the Author

Jesus De Meyer has a Bachelor's degree in Multimedia and Communication Technology from the PIH University in Kortrijk, Belgium. He has 15 years of experience developing software applications for Mac and more than 5 years in iOS mobile app development. He currently works at iTexico as an iOS 7 Mobile App Developer and in his spare time he works on his own mobile apps.
